Tags

Providing a suitable High Availability (HA) solution for each database system is one of the challenging tasks for a DBA and here we have to answer some questions like the following ones:

- What is the cost for that HA solution?

- Is it required to change the system structure or the DB design to use that HA solution?

- Is it complicate to understand, use or maintain ?

Choosing the suitable HA solution for each system will depend on the answers of such questions …

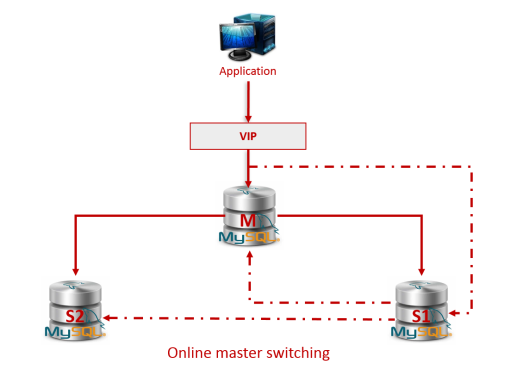

In this post, I’m going to write about MySQL Master High Availability MHA as a nice tool which provides HA for MySQL by performing automatic fail-over or fast online master switching with almost no downtime!

Before going through more details about MHA, let’s first answer the previous questions:

- MHA is a free opensource tool, no cost to use it in your system and even no additional servers are required.

- No system changes or DB redesign are required to use MHA, keep your system as it is and just use the tool! (Assuming that MySQL replication is already being used).

- MHA is easy to implement and its usage is simple.

Overview

As I mentioned above, MHA provides two main benefits:

- Automatic fail-over: which is helpful for the unexpected master failure, crash, … etc. (the fail-over can be done also manually).

- Fast online master switching: which is helpful when performing the regular planned or scheduled maintenance operations like kernel updates, MySQL upgrade, HW upgrade, … etc.

If we have 3 servers, master and two slaves (slave1 and slave2) and we want to perform a planned maintenance on the master, we will need to perform the following:

- Make sure that no slave lagging problem and all servers are at the same point.

- Promote one of the slaves (slave1) to be a new master.

- Point the other slave (slave2) to slave1 instead of master.

- Configure master to be slave for slave1 to get all updates which will happen during the maintenance.

- Stop MySQL service on master to perform the maintenance operation (kernel update “server restart”, MySQL version upgrade, .. etc).

- Start MySQL service on master and wait until getting all missing transactions from slave1.

- Promote back master to be the current master.

- Re-point slave2 to master again.

- Configure slave1 to be back as a slave for master.

It’s not as simple as it appears, especially, if classic replication is being used because we will need to get the matched binary log file name and position between the old master and the new one to be used on the slave(s) in the re-pointing step to the new master!

Also, it makes sense to use VIP on the top of the DB layer to be used by the application to avoid extra work from the application – or maybe downtime – during the fail-over process. So, we will need to do extra steps to remove/add the VIP from/to the demoted/promoted master!

MHA can make such process pretty simple and easy!!

Implementation of MHA (version 0.56 at the time of writing this post)

Installation:

MHA consists of two packages, node and manager:

- Node package: Should be installed on all DB servers (the master and the two slaves in this example).

- Manager package: Recommended to be installed on a separate server but it’s OK to install it on any one of them (preferred not the master in case of the master failed e.g. slave1).

Note:

- MHA packages can be downloaded from here.

- Some Perl dependencies are required (DBD::mysql on all servers while Config::Tiny, Log::Dispatch, Parallel::ForkManager and Time::HiRes are required on the manager).

Scripts preparation (on the manager):

- Create an application config file (check /sample/conf):

vi /etc/mha/app1.cnf[server default]

# mysql user and password for MHA (should be created on all DB servers)

user=mhauser

password=M0llP@ss

# Replication user password

repl_password=s3cret

ssh_user=root

# working directory on the manager

manager_workdir=/var/log/masterha/app1

# working directory on MySQL servers

remote_workdir=/var/log/masterha/app1

#In case of online master switching, the following script will be used

master_ip_online_change_script=/etc/mha/master_ip_online_change

#In case of automatic fail-over, the following script will be used

master_ip_failover_script=/etc/mha/master_ip_failover[server1]

hostname=master[server2]

hostname=slave1

#Setting higher priority of being the new master (not guaranteed if it was lagging too much)

candidate_master=1[server3]

hostname=slave2

#if this server should never be the master (diff data center, weak HW, … etc.)

no_master=1Notes:

- No need to specify which server is the master as MHA detects it automatically.

- candidate_master and no_master options are needed only in the fail-over as in the online master switching we will specify the new master host.

- Copy the scripts “master_ip_failover” (will be executed when performing the fail-over) and “master_ip_online_change” (will be executed when performing the online master switching) from /sample/scripts to “/etc/mha/” and make sure they are executable (you can copy only the one you plan to use).

- Add the VIP handling part in both scripts:

my $vip = '192.168.1.100'; # Virtual IP# A simple function that disables the VIP on the old_master (should be called when stopping the old master)

my $ssh_add_vip = "/sbin/ip addr add $vip/24 dev eth1";

my $ssh_del_vip = "/sbin/ip addr del $vip/24 dev eth1";

my $ssh_send_garp = "/sbin/arping -U $vip -I eth1 -c 1";

.

.

sub main {

if ( $command eq "stop" ) {

.

.

## Removing the VIP

print "Disabling the VIP on the old master if the server is still UP: $orig_master_host \n";

&del_vip();

print "DONE ... \n";

.

.

elsif ( $command eq "start" ) {

.

.

## Adding the VIP

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&add_vip();

print "DONE ... \n";

.

.

# A simple function that enables the VIP on the new master (should be called when starting the new master)

sub add_vip() {

`ssh $new_master_ssh_user\@$new_master_host \" $ssh_add_vip \"`;

`ssh $new_master_ssh_user\@$new_master_host \" $ssh_send_garp \"`;

}

sub del_vip() {

`ssh $orig_master_ssh_user\@$orig_master_host \” $ssh_del_vip \”`;

}

Environment preparation:

- Create MySQL user on all DB servers to be used by MHA:

GRANT ALL ON *.* TO 'mhauser'@'%' IDENTIFIED BY 'M0llP@ss'; - Create the replication user on the candidate-master server (slave1 in this example):

GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%' IDENTIFIED BY's3cret'; - Generate ssh key on all servers if it was not already generated:

ssh-keygen -t rsa - Allow connections between all servers using the ssh keys without prompting for passwords (passphraseless login):On the master:

root@master:/# ssh-copy-id root@slave1And do the same on slave1 and slave2 …

root@master:/# ssh-copy-id root@slave2

root@master:/# ssh-copy-id root@master ## Self referential is necessary!! - Check from the manager (“slave1”) if SSH settings are correct or not:

masterha_check_ssh --conf=/etc/mha/app1.cnf

The message “All SSH connection tests passed successfully” should be printed at the end, otherwise, something wrong should be fixed first. - Check if the replication health is OK or not (from the manager also):

masterha_check_repl --conf=/etc/mha/app1.cnf

The message “MySQL Replication Health is OK” should be printed at the end, otherwise, something wrong should be fixed first. - Add the virtual IP on the master to be used by the application (if it was not already added):

ip addr add 192.168.1.100/24 dev eth1

Now we are ready to perform the fail-over 😉

Perform the fail-over:

Automatic fail-over:

- To perform the fail-over automatically, the manager should monitor the master. Use the following command to do so:

slave1# nohup masterha_manager --conf=/etc/mha/app1.cnf /var/log/masterha/app1/app1.log 2>&1 & - To check if it is monitoring or not, execute the following command:

slave1# masterha_check_status --conf=/etc/mha/app1.cnf

Once the manager failed to connect to the master three times (you might need to configure secondary_check_script to make sure it is not a network issue) it will change the master automatically and stop monitoring.

Notes:

- shutdown_script should be used to power-off the master to make sure it is really down and to avoid split-brain.

- If you want to stop monitoring the master for any reason, use the following command:

masterha_stop –conf=/etc/mha/app1.cnf

Manual fail-over:

Sometimes, automatic fail-over is not preferred, so we can do it manually once the master failed using the following command (detecting the master failure is our responsibility!):

masterha_master_switch --master_state=dead --conf=/etc/mha/app1.cnf --dead_master_host=master

Online master switching (planned maintenance):

To perform online master switching for maintenance purpose, just use the following command:

masterha_master_switch --master_state=alive --conf=/etc/mha/app1.cnf --new_master_host=slave1 --orig_master_is_new_slave

Notes:

- It is recommended to double check the SSH connectivity and replication health also directly before switching the master using

masterha_check_sshandmasterha_check_replscripts. - MHA will execute “

FLUSH TABLES WITH READ LOCK;” after freezing the writes on the master. - Using

--orig_master_is_new_slaveoption will make the master slave for the new master after the switch process.

Common issues and useful tips:

SSH checking is not successful!!

- Host-names with its IPs are added to /etc/hosts ??

- Remote root login is permitted ?? “PermitRootLogin yes” in /etc/ssh/sshd_config (sshd should be restarted after that change)!

- Don’t forget the self referential!!

Replication checking is not successful!!

- Binary logging is not enabled on the candidate-master slave?!

- Binary logging is enabled on any of the slaves but the replication user is not created there ?? MHA will consider any slave having bin_log enabled as a candidate master in the replication checking. So, either we have to disable bin_log on all slaves except the candidate master or create the replication user on all slaves having bin_log enabled.

- Filtration rules are different on the slaves? It should be the same on all slave servers!

- binlog path is different? consider adding

master_binlog_dirin app1.cnf! - Same with pid file path, mysql port, mysql client bin dir, … etc. if they are in different paths (full parameter list can be checked out here).

- Master/Master in active/active setup? (one of them should be read_only as only one active is allowed “active/passive”).

- MySQL user for MHA ? not enough privileges!!

Slaves can’t connect to the new master!

The replication user does not exist on the candidate master ?

Changing the VIP to the new master is not being reflected immediately to servers in other networks!!

“Gratuitous ARP” need to be sent after adding the VIP on the new master to update the arp table!!

arping -U $vip -I eth1 -c 1

One manager can manage many systems!

I.e. app1, app2, … etc. and it is recommended to have one manager per data-center.

Examples of who uses MHA on productions:

Conclusion

- MHA implementation in short:

- Install node package on all MySQL servers.

- Install manager package on the manager.

- Create the needed scripts (app1.conf, master_ip_failover, .. etc.).

- masterha_check_ssh –conf=/etc/mha/app1.cnf

- masterha_check_repl –conf=/etc/mha/app1.cnf

- masterha_manager for fail-over or masterha_master_switch for master switch.

- That’s it !!!

- Having your system high available with no additional costs, no system or DB redesign and not in a complex way is possible with the opensource tool, MHA!

- Finally, thanks to Yoshinori Matsunobu for creating such wonderful tool!!

So, what is the mhauser needed? According to the mha official site, there is no mention of creating a mhauser.

LikeLike

Sven,

A MySQL user for MHA (here I called it “mhauser”) is needed for the followings:

1- Checking the replication status (slaves are connected/started or not and maybe checking binlog is enabled in the candidate-master or not).

2- Issue “FLUSH TABLES WITH READ LOCK” and marking the master as read_only in case of the manual fail-over.

3- Kill the current transactions on the master – if not yet finished before the allowed time – before the failing over.

4- Check and apply the relay logs on the slaves if they were not yet replayed.

5- Issue the “CHANGE MASTER TO …” command on all slaves – maybe the old master as well – after promoting a new master.

LikeLiked by 1 person

Hi,

Is MHA compatible with MariaDB ?

LikeLike

Yes, MHA 0.56 is compatible with MariaDB!

Although it supports MySQL GTID but it does not support MariaDB GTID as it has different implementation.

LikeLiked by 1 person

Hello.

I’m using MHA 0.57 with GTID based replication. If I use masterha_check_repl, mha says that all SSH checks will be skipped but in the next line it says that it will check SSH publickey authentication settings on the master. Why is that? Is SSH needed in GITD based failover scenarios?

LikeLike

Hello, How do I implement this setup with HA Proxy. Or any other load balancer

LikeLike

Generally, you can do the load balancer changes instead of the VIP parts in the MHA scripts but it depends on the Load Balancer you are using. E.g. in HAProxy, you can add a second server for the write requests as a backup to be used by default when the main master fail. In that case, MHA should be configured only to restructure the replication.

LikeLike

I believe there are 3 main users in the MHA setup and the mhauser’s role has been clearly defined here by Moll. What about the ssh_user and which user does the ip vip failover because I can into a situation where the vip would not move when the ssh_user was not root but had sudo privileges. I ended up with Permission denied.

LikeLike

The ssh_user should be able to execute /sbin/ip and /sbin/arping commands if you are not using the root. E.g. edit the /etc/sudoers file:

non_root_user ALL=NOPASSWD: /sbin/ip, /sbin/arping

LikeLike

Hello,

I have observed that during a manual switch master_ip_failover_script is not called. However, VIP failover works just fine during automated failover. Is this by design? If so, why? If not, can I activate VIP failover some how?

Thanks,

Suresh

LikeLike

Suresh,

When you do a manual master role switching the master_ip_failover_script is not being executed but master_ip_online_change!

Please refer to “Scripts preparation (on the manager)” section, point No. 2 in this post:

“Copy the scripts “master_ip_failover” (will be executed when performing the fail-over) and “master_ip_online_change” (will be executed when performing the online master switching) from /sample/scripts to “/etc/mha/” and make sure they are executable (you can copy only the one you plan to use).”

LikeLiked by 1 person

Hi Moll,

Thanks a lot for the tip. I will try that out.

Cheers,

Suresh

LikeLike

I’m using MariaDB 10.1.21, with the mha 0.56. I tried everything, and it showing the below error.

Fri Jul 14 06:18:52 2017 – [error][/usr/share/perl5/vendor_perl/MHA/ServerManager.pm, ln492] Server 10.16.14.224(10.16.14.224:3306) is dead, but must be alive! Check server settings.

Fri Jul 14 06:18:52 2017 – [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln424] Error happened on checking configurations. at /usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm line 399

Fri Jul 14 06:18:52 2017 – [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln523] Error happened on monitoring servers.

Fri Jul 14 06:18:52 2017 – [info] Got exit code 1 (Not master dead).

MySQL Replication Health is NOT OK!

Can you help on fixing this?

ps: MySQL replication is working fine.

LikeLike

Ramesh,

It looks to me like the problem is related MHA can’t connect to that servers. Are you sure the MHA DB user can connect to that server from the MHA manager?

If you are using different settings than the defaults, like datadir, port, … etc. you should specify that in the MHA configs as well.

LikeLike

Is it possible for two mysql server one is master and second is slave ?

LikeLike

where we can download rpm for MHA. I was unable to locate mha 0.56 rpm for mariadb.

LikeLike

Looks like 0.56 is not there anymore!

the latest version on the mha site is now 0.55:

https://code.google.com/archive/p/mysql-master-ha/downloads

LikeLike

hi i’m using os ubuntu16.04 db mariadb 10.1.29 with the mha 0.55

I tried everything, and it showing the below error.

root@Mha:/# masterha_check_repl –conf=/etc/app1.cnf

Tue Nov 21 06:27:31 2017 – [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Tue Nov 21 06:27:31 2017 – [info] Reading application default configurations from /etc/app1.cnf..

Tue Nov 21 06:27:31 2017 – [info] Reading server configurations from /etc/app1.cnf..

Tue Nov 21 06:27:31 2017 – [info] MHA::MasterMonitor version 0.55.

Tue Nov 21 06:27:32 2017 – [error][/usr/local/share/perl/5.22.1/MHA/MasterMonitor.pm, ln386] Error happend on checking configurations. Redundant argument in sprintf at /usr/local/share/perl/5.22.1/MHA/NodeUtil.pm line 184.

Tue Nov 21 06:27:32 2017 – [error][/usr/local/share/perl/5.22.1/MHA/MasterMonitor.pm, ln482] Error happened on monitoring servers.

Tue Nov 21 06:27:32 2017 – [info] Got exit code 1 (Not master dead).

root@Mha:/# cat /etc/app1.cnf

[server default]

# mysql user and password

user=mhauser

password=12345

# working directory on the manager

manager_workdir=/var/log/masterha/app1

# manager log file

manager_log=/var/log/masterha/app1/app1.log

# working directory on MySQL servers

remote_workdir=/var/log/masterha/app1

[server1]

hostname=192.168.31.109

master_binlog_dir=/usr/local/mariadb/data

candidate_master=1

[server2]

hostname=192.168.31.110

master_binlog_dir=/usr/local/mariadb/data

candidate_master=1

[server3]

hostname=192.168.31.107

master_binlog_dir=/usr/local/mariadb/data

no_master=1

root@Mha:/# masterha_check_ssh –conf=/etc/app1.cnf

Tue Nov 21 07:26:03 2017 – [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Tue Nov 21 07:26:03 2017 – [info] Reading application default configurations from /etc/app1.cnf..

Tue Nov 21 07:26:03 2017 – [info] Reading server configurations from /etc/app1.cnf..

Tue Nov 21 07:26:03 2017 – [info] Starting SSH connection tests..

Tue Nov 21 07:26:04 2017 – [debug]

Tue Nov 21 07:26:03 2017 – [debug] Connecting via SSH from root@192.168.31.109(192.168.31.109:22) to root@192.168.31.110(192.168.31.110:22)..

Tue Nov 21 07:26:03 2017 – [debug] ok.

Tue Nov 21 07:26:03 2017 – [debug] Connecting via SSH from root@192.168.31.109(192.168.31.109:22) to root@192.168.31.107(192.168.31.107:22)..

Tue Nov 21 07:26:03 2017 – [debug] ok.

Tue Nov 21 07:26:04 2017 – [debug]

Tue Nov 21 07:26:03 2017 – [debug] Connecting via SSH from root@192.168.31.110(192.168.31.110:22) to root@192.168.31.109(192.168.31.109:22)..

Tue Nov 21 07:26:04 2017 – [debug] ok.

Tue Nov 21 07:26:04 2017 – [debug] Connecting via SSH from root@192.168.31.110(192.168.31.110:22) to root@192.168.31.107(192.168.31.107:22)..

Tue Nov 21 07:26:04 2017 – [debug] ok.

Tue Nov 21 07:26:05 2017 – [debug]

Tue Nov 21 07:26:04 2017 – [debug] Connecting via SSH from root@192.168.31.107(192.168.31.107:22) to root@192.168.31.109(192.168.31.109:22)..

Tue Nov 21 07:26:04 2017 – [debug] ok.

Tue Nov 21 07:26:04 2017 – [debug] Connecting via SSH from root@192.168.31.107(192.168.31.107:22) to root@192.168.31.110(192.168.31.110:22)..

Tue Nov 21 07:26:04 2017 – [debug] ok.

Tue Nov 21 07:26:05 2017 – [info] All SSH connection tests passed successfully.

vim /usr/local/share/perl/5.22.1/MHA/NodeUtil.pm

186 sub check_manager_version {

187 my $manager_version = shift;

188 if ( $manager_version < $MHA::NodeConst::MGR_MIN_VERSION ) {

189 croak

190 "MHA Manager version is $manager_version, but must be $MHA::NodeConst::MGR_MIN_VERSION or higher.\n";

191 }

192 }

Can you help on fixing this?

LikeLike